This article describes how the data you provide to the Azure OpenAI Service is processed, used, and stored. Azure OpenAI stores and processes data to provide the service and to monitor for uses that violate the applicable product terms. See also the Microsoft Products and Services Data Protection Addendum, which governs data processing by the Azure OpenAI Service except as otherwise provided in the applicable Product Terms.

Important

Your prompts (inputs) and completions (outputs), your embeddings, and your training data:

- are NOT available to other customers.

- are NOT available to OpenAI.

- are NOT used to improve OpenAI models.

- are NOT used to improve any Microsoft or third-party products or services.

- are NOT used to automatically improve Azure OpenAI models for your use in your resource (the models are stateless unless you explicitly fine-tune them with your training data).

Your fine-tuned Azure OpenAI models are available exclusively for your use.

The Azure OpenAI Service is fully controlled by Microsoft; Microsoft hosts the OpenAI models in Microsoft’s Azure environment and the Service does NOT interact with any services operated by OpenAI (e.g. ChatGPT, or the OpenAI API).

What data does the Azure OpenAI Service process?

Azure OpenAI processes the following types of data:

- Prompts and generated content. Prompts are submitted by the user, and content is generated by the service, via the completions, chat completions, images and embeddings operations.

- Augmented data included with prompts. When using the “on your data” feature, the service retrieves relevant data from a configured data store and augments the prompt to produce generations that are grounded with your data.

- Training & validation data. You can provide your own training data consisting of prompt-completion pairs for the purposes of fine-tuning an OpenAI model.
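As an illustration of what "prompt-completion pairs" can look like in practice, the following is a minimal sketch that serializes a few pairs as JSON Lines (one JSON object per line). The JSONL layout, the `to_jsonl` helper, and the example records are assumptions made for illustration; check the fine-tuning documentation for the exact format your model requires.

```python
import json

# Hypothetical example records; the contents are illustrative only.
examples = [
    {"prompt": "Classify the sentiment: 'Great service!' ->", "completion": " positive"},
    {"prompt": "Classify the sentiment: 'Never again.' ->", "completion": " negative"},
]


def to_jsonl(records):
    """Serialize prompt-completion pairs as one JSON object per line."""
    lines = []
    for record in records:
        # Each record must contain exactly the two expected fields.
        if set(record) != {"prompt", "completion"}:
            raise ValueError(f"unexpected keys: {sorted(record)}")
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


training_jsonl = to_jsonl(examples)
```

Validating the keys before upload is a cheap way to catch malformed records early.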

How does the Azure OpenAI Service process data?

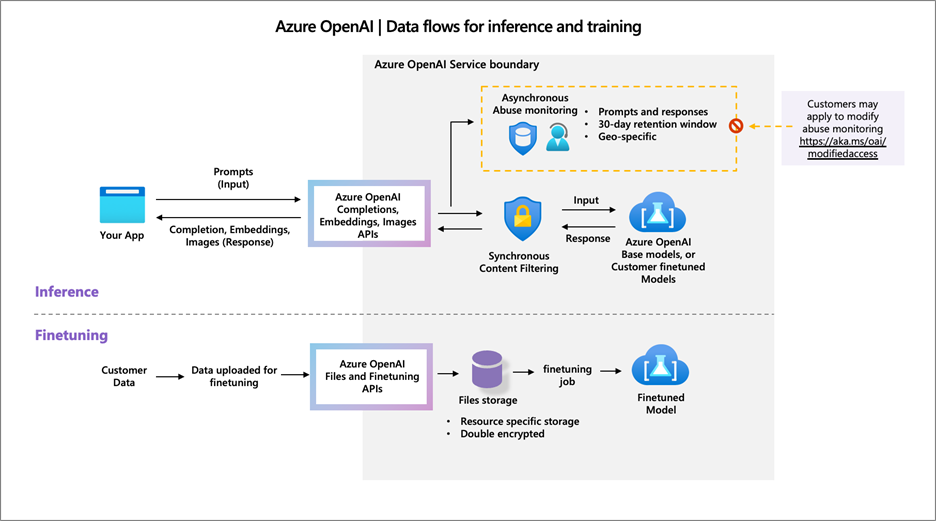

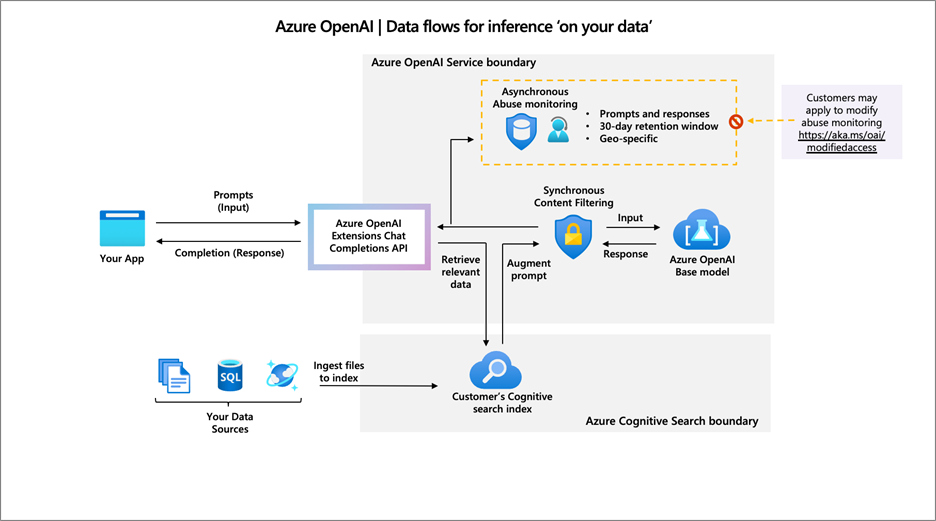

The diagram above illustrates how your data is processed. This diagram covers three different types of processing:

- How the Azure OpenAI Service processes your prompts to generate content (including when additional data from a connected data source is added to a prompt using Azure OpenAI on your data).

- How the Azure OpenAI Service creates a fine-tuned (custom) model with your training data.

- How the Azure OpenAI Service and Microsoft personnel analyze prompts, completions, and images for harmful content and for patterns suggesting the use of the service in a manner that violates the Code of Conduct or other applicable product terms.

As depicted in the diagram above, managed customers may apply to modify abuse monitoring.

Generating completions, images or embeddings

Models (base or fine-tuned) deployed in your resource process your input prompts and generate responses with text, images, or embeddings. The service synchronously evaluates the prompt and completion data in real time to check for harmful content types, and it stops generating content that exceeds the configured thresholds. Learn more at Azure OpenAI Service content filtering.
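The idea of synchronous filtering against a configured threshold can be sketched as follows. This is a conceptual toy, not the service's implementation: the `generate_with_filter` function, the word-list classifier `toy_score`, and the numeric threshold are all hypothetical stand-ins.

```python
from typing import Callable, Iterable, Tuple

# Toy stand-in for a harmful-content classifier (hypothetical).
BLOCKLIST = {"badword"}


def toy_score(text: str) -> float:
    """Return the fraction of words in the text that appear in the blocklist."""
    words = text.lower().split()
    if not words:
        return 0.0
    return sum(w in BLOCKLIST for w in words) / len(words)


def generate_with_filter(
    prompt: str,
    chunks: Iterable[str],
    score: Callable[[str], float],
    threshold: float,
) -> Tuple[str, bool]:
    """Check the prompt, then each partial completion, before releasing text.

    Returns (text_released, was_stopped).
    """
    # The prompt is evaluated before any generation is released.
    if score(prompt) > threshold:
        return "", True
    released = ""
    for chunk in chunks:
        candidate = released + chunk
        # Evaluate synchronously; stop as soon as the threshold is exceeded.
        if score(candidate) > threshold:
            return released, True
        released = candidate
    return released, False
```

In this sketch nothing is written to storage: each check is a pure function of the text in flight, which mirrors the statement that filtering happens as the request is processed.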

The models are stateless: no prompts or generations are stored in the model. Additionally, prompts and generations are not used to train, retrain, or improve the base models.

Augmenting prompts with data retrieved from your data sources to “ground” the generated results

The Azure OpenAI “on your data” feature lets you connect data sources to ground the generated results with your data. The data remains stored in the data source and location you designate. No data is copied into the Azure OpenAI service. When a user prompt is received, the service retrieves relevant data from the connected data source and augments the prompt. The model processes this augmented prompt and the generated content is returned as described above.
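The retrieve-then-augment flow described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the word-overlap ranking, the `retrieve` and `augment_prompt` helpers, the prompt template, and the in-memory `documents` list (standing in for your configured data store) are hypothetical and do not reflect how the service ranks or formats retrieved data.

```python
import re

# Stand-in for the customer's own data store; the data stays here.
documents = [
    "The warranty lasts two years.",
    "Shipping takes five days.",
    "Returns are free.",
]


def _words(text):
    """Lowercase the text and split it into alphanumeric words."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, docs, top_k=2):
    """Return up to top_k documents that share the most words with the query."""
    query_words = _words(query)
    scored = []
    for doc in docs:
        overlap = len(query_words & _words(doc))
        if overlap > 0:
            scored.append((overlap, doc))
    # Highest overlap first; the sort is stable, so ties keep input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def augment_prompt(user_prompt, docs):
    """Build a grounded prompt from the user prompt and retrieved passages."""
    passages = retrieve(user_prompt, docs)
    sources = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only these sources:\n{sources}\n\nQuestion: {user_prompt}"


augmented = augment_prompt("How long is the warranty?", documents)
```

Only the passages selected for a given request travel with the prompt; the source documents themselves are read in place.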


Creating a customized (fine-tuned) model with your data

Customers can upload their training data to the service to fine-tune a model. Uploaded training data is stored in the Azure OpenAI resource in the customer’s Azure tenant. Training data and fine-tuned models:

- Are available exclusively for use by the customer.

- Are stored within the same region as the Azure OpenAI resource.

- Can be double encrypted at rest (by default with Microsoft’s AES-256 encryption and optionally with a customer managed key).

- Can be deleted by the customer at any time.

Training data uploaded for fine-tuning is not used to train, retrain, or improve any Microsoft or third-party base models.

Preventing abuse and harmful content generation

To reduce the risk of harmful use, the Azure OpenAI Service includes both content filtering and abuse monitoring features. To learn more about content filtering, see Azure OpenAI Service content filtering. To learn more about abuse monitoring, see abuse monitoring.

Content filtering occurs synchronously as the service processes prompts to generate content, as described above. No prompts or generated results are stored in the content classifier models, and prompts and results are not used to train, retrain, or improve the classifier models.

Azure OpenAI abuse monitoring detects and mitigates instances of recurring content or behaviors that suggest use of the service in a manner that may violate the Code of Conduct or other applicable product terms. To detect and mitigate abuse, Azure OpenAI stores all prompts and generated content securely for up to thirty (30) days. (No prompts or completions are stored if the customer is approved for and elects to configure abuse monitoring off, as described below.)
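To make the retention bound concrete, here is a minimal sketch of a 30-day retention check. Only the 30-day figure comes from the text above; the record layout, the `is_expired` and `purge_expired` helpers, and the sample timestamps are hypothetical and say nothing about how the service actually schedules deletion.

```python
from datetime import datetime, timedelta, timezone

RETENTION = timedelta(days=30)  # upper bound stated in the text


def is_expired(stored_at, now):
    """Return True if the record has been held longer than the retention bound."""
    return now - stored_at > RETENTION


def purge_expired(records, now):
    """Keep only the records that are still within the retention window."""
    return [r for r in records if not is_expired(r["stored_at"], now)]


# Hypothetical sample data with fixed timestamps.
now = datetime(2024, 3, 1, tzinfo=timezone.utc)
records = [
    {"request_id": "a", "stored_at": now - timedelta(days=31)},
    {"request_id": "b", "stored_at": now - timedelta(days=29)},
]
remaining = purge_expired(records, now)
```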

The data store where prompts and completions are stored is logically separated by customer resource (each request includes the resource ID of the customer’s Azure OpenAI resource). A separate data store is located in each region in which the Azure OpenAI Service is available, and a customer’s prompts and generated content are stored in the Azure region where the customer’s Azure OpenAI Service resource is deployed, within the Azure OpenAI Service boundary. Human reviewers assessing potential abuse can access prompts and completions data only when that data has been flagged by the abuse monitoring system. The human reviewers are authorized Microsoft employees who access the data via pointwise queries using request IDs, Secure Access Workstations (SAWs), and Just-In-Time (JIT) request approval granted by team managers. For Azure OpenAI Service deployed in the European Economic Area, the authorized Microsoft employees are located in the European Economic Area.
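The access rules in this paragraph (partitioning by resource ID, lookups by request ID, access only to flagged data, and JIT approval) can be modeled with a small in-memory sketch. The `AbuseMonitoringStore` class, its method names, and the boolean `jit_approved` argument are hypothetical illustrations of those rules, not the service's actual storage or authorization design.

```python
class AbuseMonitoringStore:
    """Toy store: records are partitioned by resource ID, keyed by request ID."""

    def __init__(self):
        self._by_resource = {}

    def put(self, resource_id, request_id, prompt, completion, flagged=False):
        """Store a record inside the partition of its own resource."""
        partition = self._by_resource.setdefault(resource_id, {})
        partition[request_id] = {
            "prompt": prompt,
            "completion": completion,
            "flagged": flagged,
        }

    def review(self, resource_id, request_id, jit_approved):
        """Point lookup for a reviewer; requires JIT approval and a flagged record."""
        if not jit_approved:
            raise PermissionError("JIT approval is required")
        record = self._by_resource.get(resource_id, {}).get(request_id)
        if record is None:
            raise KeyError(request_id)
        if not record["flagged"]:
            raise PermissionError("record was not flagged by abuse monitoring")
        return record["prompt"], record["completion"]
```

Because `review` only supports a lookup by a single request ID, the sketch has no way to browse or scan a customer's partition, which is the point of a pointwise query.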